AI feels smart, sometimes even human. It can write essays, plan trips, explain quantum physics… but ask it what you talked about yesterday, and it draws a blank. That’s because most AI today has no memory.

Real AI agents need persistent memory to learn from experience, adapt to user preferences, and build relationships over time. To do that, they need a true long-term memory system, one that can store and recall what matters.

This article breaks down what AI memory really is, why LLMs don’t have it, what kinds of memory agents need, and how Memvid gives you a reliable and portable AI memory system in a single file.

What Is AI Memory, and How Does Memvid Make It Real?

When we talk about “AI memory,” it’s easy to imagine something very human: a system that remembers what we said and uses that information later. But most AI today doesn’t actually do that, at least not by default.

To understand why memory matters (and how Memvid solves it), we first need to unpack what memory really is.

So… what is memory, really?

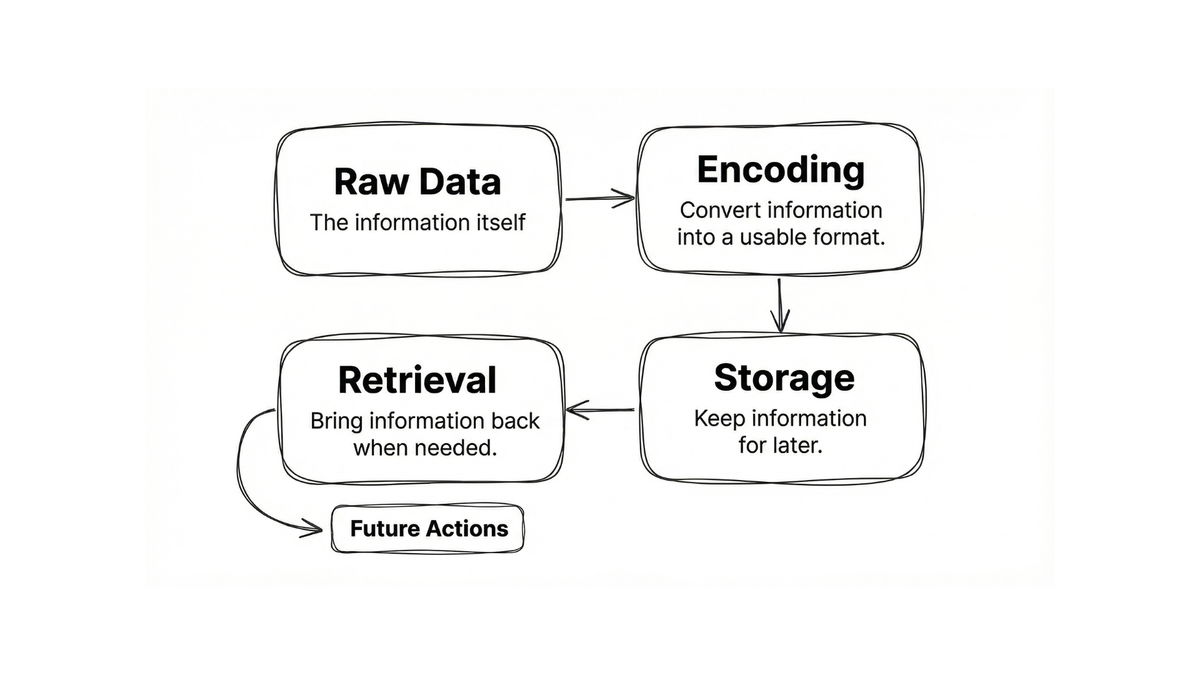

Let’s borrow the classic definition from cognitive science: Memory is the ability to encode, store, and retrieve information so it can influence future actions.

A common misconception is that memory equals information. But memory is not just information stored somewhere. It is a system made up of four components:

- Raw Data: The information itself

- Encoding: Transforming data into a usable format

- Storage: Where the information lives long-term

- Retrieval: How information is recalled and applied

Without all four, you don’t have memory — you just have data lying around.

Therefore the correct formula is: Memory = Raw Data + Encoding + Storage + Retrieval

Humans do this instinctively. AI developers must build these systems intentionally.
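To make the four components concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `TinyMemory` class, the `encode` helper, and the choice of word overlap as the retrieval rule. Real systems typically use embeddings or a search index for encoding and retrieval.

```python
from dataclasses import dataclass, field
from typing import Optional


def encode(text: str) -> frozenset[str]:
    """Encoding: turn raw text into a comparable form (a set of lowercase words)."""
    return frozenset(word.strip(".,!?").lower() for word in text.split())


@dataclass
class TinyMemory:
    # Storage: each entry keeps the raw data next to its encoded form.
    entries: list[tuple[str, frozenset[str]]] = field(default_factory=list)

    def remember(self, raw: str) -> None:
        self.entries.append((raw, encode(raw)))

    def recall(self, query: str) -> Optional[str]:
        """Retrieval: return the stored text that shares the most words with the query."""
        q = encode(query)
        best = max(self.entries, key=lambda e: len(q & e[1]), default=None)
        if best is None or not (q & best[1]):
            return None  # nothing stored, or nothing overlaps
        return best[0]


memory = TinyMemory()
memory.remember("The user prefers Flutter over React Native.")
memory.remember("The design brief is due on Friday.")
print(memory.recall("When is the design brief due?"))
# prints: The design brief is due on Friday.
```

Remove any one piece and the sketch stops working as memory: without `encode` the entries cannot be compared, without `entries` nothing persists, and without `recall` the stored data never influences a later answer.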

Why AI agents forget everything

Large Language Models (LLMs) are stateless by design, meaning they have no persistent memory. Every prompt is a blank slate unless the application sends the previous chat history along with it.

They learn during training and store knowledge in their weights (called parametric memory). But:

- They don’t remember earlier turns on their own; each request sees only the text it is given

- Every chat is a fresh start

- History only exists if the developer manually includes it

That’s why any AI application claiming “persistent memory” is really adding external memory, a system outside the model that tracks what should be remembered.
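The statelessness described above can be sketched with a toy stand-in for a model call. `stateless_model` is hypothetical and is not a real LLM API; the point is only that its answer depends on nothing except the `messages` passed in, so "memory" is whatever history the application chooses to resend.

```python
def stateless_model(messages: list[dict[str, str]]) -> str:
    """Hypothetical stand-in for an LLM call: it sees only `messages`, nothing else."""
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    # Look for a name in every user turn before the latest one.
    for turn in user_turns[:-1]:
        if turn.startswith("My name is "):
            name = turn[len("My name is "):].rstrip(".")
            return f"Your name is {name}."
    return "I don't know your name."


# Two separate calls: the second has no access to the first.
stateless_model([{"role": "user", "content": "My name is Ada."}])
print(stateless_model([{"role": "user", "content": "What is my name?"}]))
# prints: I don't know your name.

# The application resends the history, so the earlier turn is visible again.
history = [
    {"role": "user", "content": "My name is Ada."},
    {"role": "assistant", "content": "Nice to meet you, Ada."},
    {"role": "user", "content": "What is my name?"},
]
print(stateless_model(history))
# prints: Your name is Ada.
```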

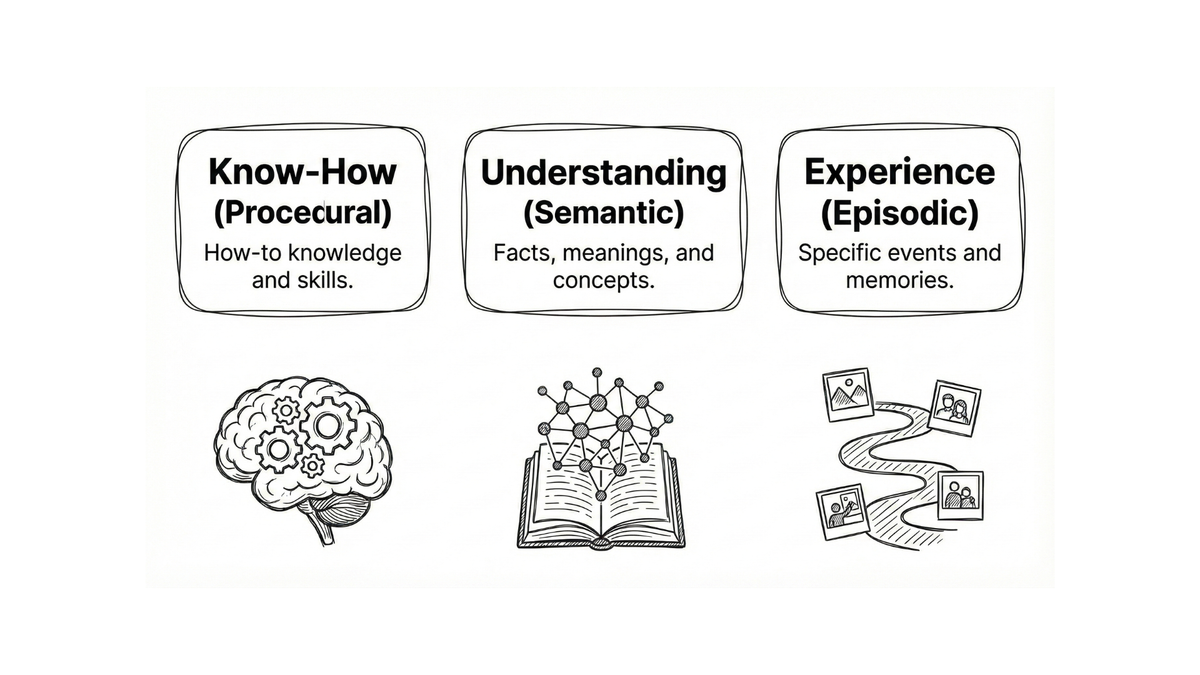

Types of AI memory

Cognitive science has studied human memory for decades, and those frameworks translate beautifully into AI.

1 - Procedural Memory (Know-How)

What the agent is fundamentally capable of doing

Every human learns core abilities they never consciously think about again — like riding a bike or speaking their native language. These skills become “automatic.”

AI has an equivalent: its built-in behavior, defined by the model’s training and the agent’s programming.

It’s the memory of how to think:

- how to structure a response

- how to call tools

- how to follow instructions

This knowledge is deeply embedded and rarely changed in everyday use. Agents don’t rewrite their neural weights to learn a new skill mid-conversation — at least not in 2025.

This memory answers: How should I operate? It’s durable, foundational, and normally off-limits for real-time updates.

2 - Semantic Memory (Understanding)

What the agent knows about the world (and about you)

This is the memory of concepts, definitions, and long-lived facts, like knowing Paris is the capital of France, or that your teammate prefers Flutter over React Native.

It’s what powers:

- personalization

- preference tracking

- domain expertise

Instead of simply focusing on the current turn, the agent can say: “Oh, you mentioned earlier you’re a huge Mexico fan for the 2026 World Cup. Want me to track their match schedule for you?”

This creates a feeling of partnership; the agent remembers what matters.

This memory answers: What do I now know that should shape the future? It is constantly evolving as the agent observes and learns.

3 - Episodic Memory (Experience)

Replayable snapshots of what has happened before

This is the timeline of a relationship between user and agent, the “episodes” they’ve lived together:

- Past chats

- Successful workflows

- Choices that worked (or failed)

This helps an agent stay consistent over time.

Instead of saying: “Can you share that document again?” it can say: “I already have your design brief from last week. Want me to use that version?”

Experience memory turns a stateless chatbot into an AI that remembers its history with you. It keeps context alive across days, months, or tasks.

How the three work together

Know-How (Procedural Memory)

• Think of it as: Skills

• Helps the agent: Behave correctly

Understanding (Semantic Memory)

• Think of it as: Knowledge

• Helps the agent: Personalize responses

Experience (Episodic Memory)

• Think of it as: History

• Helps the agent: Maintain continuity

When any one of these is missing, the agent feels noticeably incomplete:

• Without Know-How → broken

• Without Understanding → generic

• Without Experience → forgetful

A truly smart system needs all three.
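One way to picture the three memories side by side is as three fields on a single object, combined into the context an agent receives before it answers. This is a minimal sketch under stated assumptions: the `AgentMemory` class, its field names, and the `build_context` method are hypothetical, and the stored strings are made-up sample data.

```python
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    # Procedural: fixed operating instructions, not edited mid-conversation.
    procedural: str = "Answer briefly and ask before using any tool."
    # Semantic: long-lived facts and preferences, keyed by topic.
    semantic: dict[str, str] = field(default_factory=dict)
    # Episodic: a time-ordered log of what has happened.
    episodic: list[str] = field(default_factory=list)

    def build_context(self, last_n_episodes: int = 2) -> str:
        """Combine all three memories into text that could be prepended to a prompt."""
        facts = "\n".join(f"- {k}: {v}" for k, v in self.semantic.items())
        # Guard against a non-positive count, since a [-0:] slice would return everything.
        episodes = self.episodic[-last_n_episodes:] if last_n_episodes > 0 else []
        recent = "\n".join(f"- {e}" for e in episodes)
        return (
            f"Instructions:\n{self.procedural}\n\n"
            f"Known facts:\n{facts}\n\n"
            f"Recent history:\n{recent}"
        )


mem = AgentMemory()
mem.semantic["mobile framework"] = "prefers Flutter over React Native"
mem.episodic.append("Last week: user shared a design brief")
print(mem.build_context())
```

Leaving `semantic` empty produces the generic agent described above, and leaving `episodic` empty produces the forgetful one.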

Memory is application-specific

There is no universal list of what an agent should remember. What matters depends on the job the agent is doing.

Example: A coding agent should store:

- Libraries you like

- Documentation for your libraries

- File conventions

- Preferred languages

- Previous errors and solutions

A research agent should store:

- Topics you investigate

- People and companies mentioned

- Your conclusions and interests

A personal writing assistant should store:

- Tone, slang, formatting rules

- Past documents and context

- Personal facts (with permission)

If an agent tries to remember everything, it becomes noisy and brittle. If it remembers nothing, it feels frustrating and robotic.

Well-designed long-term memory should be tailored to what the agent actually needs to remember.
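A tailored memory can be expressed as a small policy that decides what is worth keeping for each kind of agent. The sketch below is illustrative only: the `MEMORY_POLICY` table, its category names, and the `should_remember` function are assumptions loosely based on the examples above, not a prescribed schema.

```python
# Hypothetical categories per agent type, loosely based on the examples above.
MEMORY_POLICY: dict[str, set[str]] = {
    "coding": {"library_preference", "file_convention", "language", "error_fix"},
    "research": {"topic", "entity", "conclusion"},
    "writing": {"tone", "formatting_rule", "past_document", "personal_fact"},
}


def should_remember(agent_type: str, category: str, user_consented: bool = False) -> bool:
    """Keep an item only if its category is relevant to this kind of agent."""
    if category not in MEMORY_POLICY.get(agent_type, set()):
        return False
    # Personal facts are stored only with explicit permission.
    if category == "personal_fact" and not user_consented:
        return False
    return True


print(should_remember("coding", "error_fix"))       # prints: True
print(should_remember("coding", "tone"))            # prints: False
print(should_remember("writing", "personal_fact"))  # prints: False
```

Filtering at write time keeps the store small and relevant, which avoids the noisy, brittle behavior of an agent that tries to remember everything.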

Why this matters now

As AI shifts from one-off interactions to ongoing collaboration, memory becomes the differentiator:

- Tools that remember users will win.

- Tools that forget will feel obsolete.

But building these memory systems usually means combining databases, indexing services, embeddings, and cloud sync, plus the operational overhead that comes with them. All that just to remember basic facts about the user.

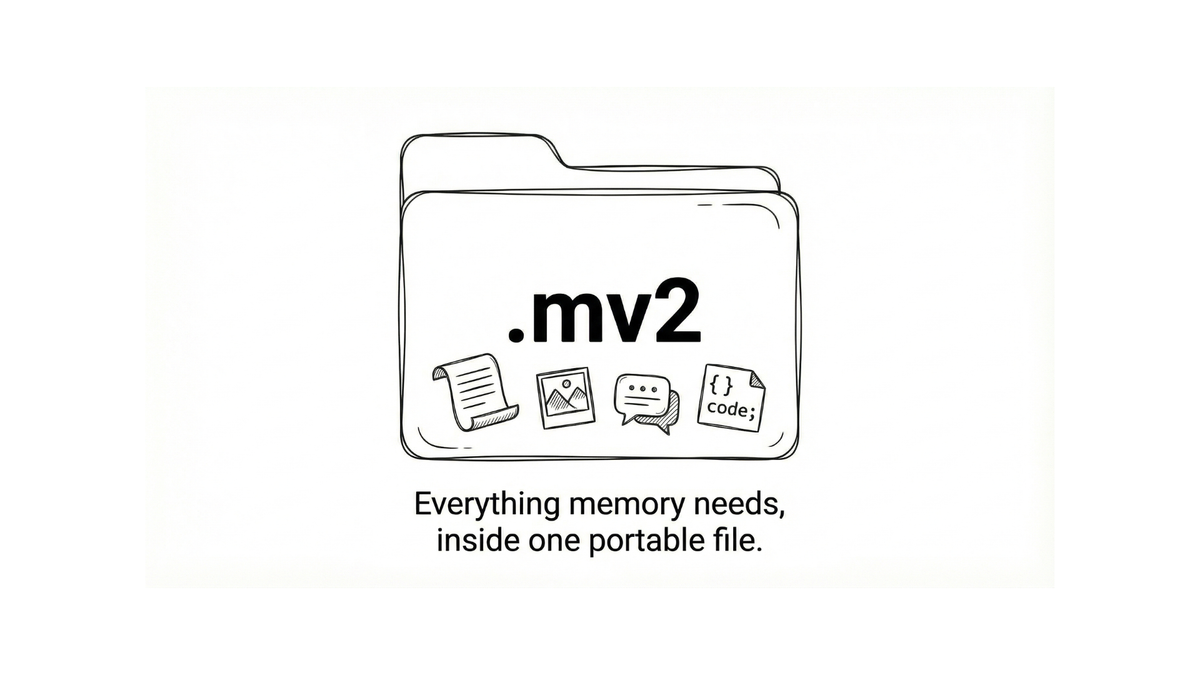

This is exactly the problem Memvid was built to eliminate.

The bottom line

If agents are going to grow from clever chatbots into true collaborators, they must remember not just what was said moments ago but also the knowledge, preferences, and history that make each user and business unique.

Yet today, building AI memory means stitching together databases, embeddings, services, and cloud infrastructure… just to keep track of the basics.

Memvid takes a radically simpler path: a complete AI memory system in a single portable file that replaces a complex RAG (retrieval-augmented generation) pipeline. No servers. No ops. No cloud dependency. Just one memory file you can ship, copy, version, encrypt, and run anywhere.
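To illustrate the general idea of memory that lives in one file, here is a stand-in sketch using SQLite from the Python standard library. It is not Memvid's API or file format, neither of which this article describes. The `open_memory`, `remember`, and `recall` helpers and the `agent_memory.db` file name are hypothetical.

```python
import sqlite3
import tempfile
from pathlib import Path
from typing import Optional


def open_memory(path: Path) -> sqlite3.Connection:
    """Open (or create) the single file that holds the whole memory."""
    conn = sqlite3.connect(str(path))
    conn.execute(
        "CREATE TABLE IF NOT EXISTS memory (key TEXT PRIMARY KEY, value TEXT NOT NULL)"
    )
    return conn


def remember(path: Path, key: str, value: str) -> None:
    conn = open_memory(path)
    with conn:  # commits the insert on success
        conn.execute(
            "INSERT OR REPLACE INTO memory (key, value) VALUES (?, ?)", (key, value)
        )
    conn.close()


def recall(path: Path, key: str) -> Optional[str]:
    conn = open_memory(path)
    row = conn.execute("SELECT value FROM memory WHERE key = ?", (key,)).fetchone()
    conn.close()
    return row[0] if row else None


memory_file = Path(tempfile.mkdtemp()) / "agent_memory.db"

# "Session 1": store a preference, then close the connection.
remember(memory_file, "framework", "Flutter")

# "Session 2": a fresh connection to the same file still has it.
print(recall(memory_file, "framework"))
# prints: Flutter
```

Because the state is a single file, copying `agent_memory.db` to another machine carries the memory with it, with no server to run.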

With Memvid, your agent doesn’t start over every time. It learns. It evolves. It remembers what matters, so it can finally feel like a real partner.